BlueOptima has always been at the forefront of innovating software development metrics. In our latest project, we set out to address one of the biggest challenges in AI development—rising cloud compute costs—by engineering a cost-effective solution that delivers one PetaFLOP of FP32 precision compute power. In this article, we walk you through the journey of our innovation, detailing the challenges, the breakthrough design, and the significant cost benefits achieved.

The Challenge: Managing Soaring Compute Demands

In today’s fast-paced world of AI development, compute demands are rapidly increasing. For BlueOptima’s state-of-the-art Large Graph Model—which performs in-depth source code analysis—providing massive compute power is not a luxury; it’s a necessity. However, relying solely on traditional cloud services for high-precision tasks (such as FP32 operations) can quickly lead to prohibitive costs.

Key challenges included:

- Rising Cloud Compute Costs: Traditional cloud environments come with steep price tags that can balloon operational budgets.

- High Compute Requirements: Our Large Graph Model required extensive FP32 precision compute power, making cost efficiency a vital concern.

- Non-Production Environment Considerations: The innovation was developed for a non-production AI development environment, emphasizing the need for experimental yet robust solutions.

The Innovation: Compact, Powerful, and Economical

Instead of accepting the high costs associated with conventional data center hardware, our engineering team designed a revolutionary solution. The new system features a compact 11U server that packs a powerful punch:

- Dual 128-Core CPU Configuration: Two high-performance compute nodes work in tandem.

- Massive Memory and GPU Power: Each node is loaded with 1 TB of RAM and is equipped with six consumer-grade GPUs.

- Optimized Hardware Architecture: Our design employs dual consumer-grade GPU configurations, specifically 10 NVIDIA RTX 4090s and 2 NVIDIA RTX 3090 Ti GPUs, combined with a memory-sharing architecture that operates at bus speed without the need for NVLink.

This setup delivers roughly 1,000 TFLOPS (one PetaFLOP) of FP32 compute performance. Our use of consumer-grade hardware and 3D-printed components not only ensured top-tier performance but also resulted in significant cost savings compared to traditional data center hardware.

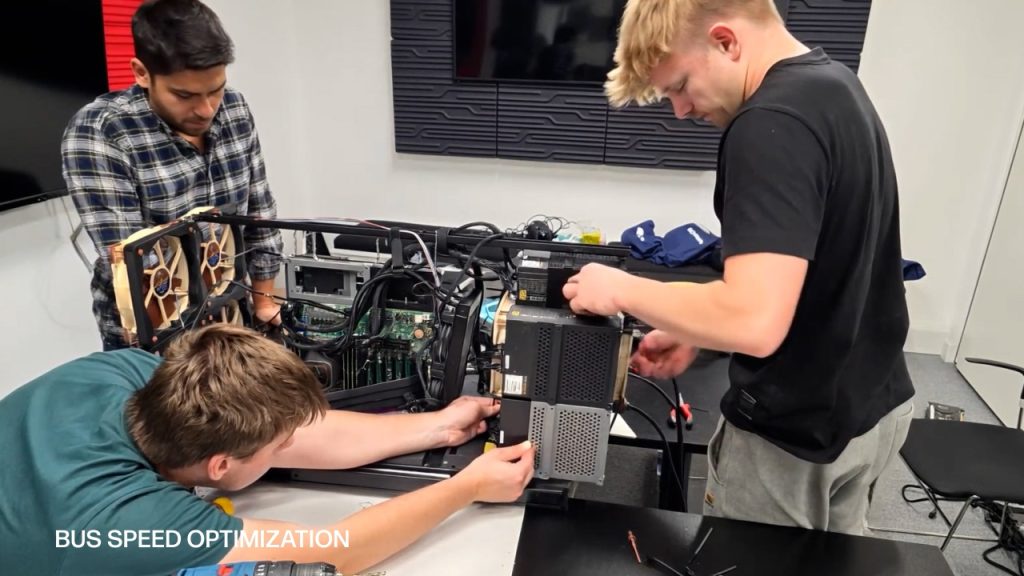

The Build Process: Engineering Excellence in Action

The journey from concept to execution involved a blend of creative engineering and precise manufacturing. Here’s how we brought our vision to life:

- Rapid Prototyping and 3D Printing: Custom components were fabricated using advanced 3D printing, ensuring that every part of the system met our exacting standards.

- Consumer-Grade Hardware Innovation: By leveraging the power of consumer-grade GPUs, we built a system that not only met the required 1 PetaFLOP benchmark but did so at a fraction of the price of a conventional data center solution.

- Thermal Management and Workload Optimization: Enhanced cooling infrastructure and intelligent workload management were integral to maintaining optimal performance. Our system manages base AI compute needs in-house while relying on cloud services only for overflow demand.
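The "in-house first, cloud for overflow" policy described above can be illustrated with a minimal sketch. This is not BlueOptima's scheduler: the `Job` type, the `route_jobs` function, and the idea of measuring demand in whole GPUs are all assumptions made for illustration. Only the figure of 12 local GPUs comes from the article.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    gpus_needed: int  # hypothetical unit of demand for this sketch


def route_jobs(jobs, local_gpus=12):
    """Greedily place jobs on local GPUs; anything that does not fit goes to the cloud."""
    free = local_gpus
    placement = {}
    for job in jobs:
        if job.gpus_needed <= free:
            placement[job.name] = "local"
            free -= job.gpus_needed
        else:
            placement[job.name] = "cloud"
    return placement


# Made-up example workload.
jobs = [Job("train-a", 8), Job("eval-b", 6), Job("embed-c", 4)]
print(route_jobs(jobs))
```

A real scheduler would also account for job duration, GPU memory, and priorities. The sketch only shows the basic decision of filling local capacity before paying for cloud capacity.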

Cost Comparison: Delivering More for Less

A key highlight of this project is the dramatic cost savings achieved. Our approach was benchmarked against conventional data center hardware, and the numbers speak for themselves:

Consumer-Grade vs. Data Center Hardware

| Feature | NVIDIA RTX 4090 | NVIDIA A100 |
|---|---|---|
| Architecture | Ada Lovelace | Ampere |
| Compute Performance (FP32) | ~83 TFLOPS per GPU | ~19.5 TFLOPS per GPU |
| Total Compute Performance | 996 TFLOPS (12 GPUs) | 234 TFLOPS (12 GPUs) |
| Memory per GPU | 24 GB GDDR6X | 40–80 GB HBM2e |
| Cost per GPU | ~$1,600 | ~$15,000 |
| Total Cost (12 GPUs) | ~$19,200 | ~$180,000 |
| Cost per TFLOP (FP32) | ~$19.28 | ~$769.23 |
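The totals and per-TFLOP figures in this table follow from the per-GPU numbers. As a quick check, the sketch below recomputes them. The function name `cost_per_tflop` is ours, and the inputs are the approximate per-GPU prices and FP32 ratings quoted in the table.

```python
def cost_per_tflop(cost_per_gpu_usd, tflops_per_gpu, gpu_count=12):
    """Return (total cost, total TFLOPS, cost per TFLOP) for a set of identical GPUs."""
    total_cost = cost_per_gpu_usd * gpu_count
    total_tflops = tflops_per_gpu * gpu_count
    return total_cost, total_tflops, total_cost / total_tflops


# Per-GPU figures taken from the table above.
for name, price, tflops in [("RTX 4090", 1_600, 83.0), ("A100", 15_000, 19.5)]:
    total_cost, total_tflops, per_tflop = cost_per_tflop(price, tflops)
    print(f"{name}: ${total_cost:,} for {total_tflops:.0f} TFLOPS "
          f"-> ${per_tflop:.2f} per TFLOP")
```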

Total Solution Cost Comparison

| Metric | RTX 4090 Solution (12 GPUs + CPUs) | A100 Solution (12 GPUs + CPUs) |
|---|---|---|
| Total TFLOPS | 1,000 | 238 |
| Total Cost (USD) | $55,200 | $216,000 |
| Cost per TFLOP (USD) | $55.20 | $907.56 |

The tables above show that our consumer-grade solution delivers more than four times the compute performance (1,000 vs. 238 TFLOPS) at roughly a quarter of the total cost ($55,200 vs. $216,000) compared to traditional data center hardware. On a per-TFLOP basis ($55.20 vs. $907.56), that is a cost saving of about 94%, so BlueOptima's approach not only meets the compute requirements but also changes the economics of AI development.
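The headline ratios can be re-derived from the total-solution table. The short sketch below does so. The dictionary layout and the helper name `per_tflop` are illustrative, and the inputs are the totals from the table.

```python
# Totals from the "Total Solution Cost Comparison" table.
rtx = {"tflops": 1_000, "cost_usd": 55_200}
a100 = {"tflops": 238, "cost_usd": 216_000}


def per_tflop(solution):
    """Cost in USD per TFLOP of FP32 compute."""
    return solution["cost_usd"] / solution["tflops"]


performance_ratio = rtx["tflops"] / a100["tflops"]
total_cost_saving = 1 - rtx["cost_usd"] / a100["cost_usd"]
per_tflop_saving = 1 - per_tflop(rtx) / per_tflop(a100)

print(f"Performance ratio: {performance_ratio:.1f}x")
print(f"Total cost saving: {total_cost_saving:.1%}")
print(f"Per-TFLOP saving:  {per_tflop_saving:.1%}")
```

With these inputs the performance ratio is about 4.2x, the total cost saving is about 74%, and the per-TFLOP saving is about 94%.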

Practical Implementation: In-House Compute Meets Cloud Scalability

The benefits of this breakthrough extend beyond raw performance:

- In-House Compute Efficiency: By handling the base AI compute in-house, BlueOptima can maintain strict control over workloads and optimize resource allocation.

- Cloud as an Overflow Option: Intelligent workload management ensures that cloud resources are used only when necessary, further optimizing costs without compromising performance.

- Real-Time Monitoring and Automation: Features like a real-time TFLOP counter, cost comparison tickers, and thermal management dashboards provide constant insight into system performance—keeping operations efficient and transparent.
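To give a sense of what a TFLOP counter and thermal check might compute, here is a minimal sketch. It is not the article's dashboard: the utilization-scaled estimate is a crude assumption (GPU utilization is not the same as achieved FLOPS), the 85 °C threshold is hypothetical, and the sample readings are made up. Only the ~83 TFLOPS per-GPU figure comes from the comparison table.

```python
PEAK_TFLOPS_PER_GPU = 83.0  # per-GPU FP32 figure quoted in the comparison table
HOT_THRESHOLD_C = 85.0      # hypothetical alert threshold, not from the article


def estimated_tflops(utilizations, peak=PEAK_TFLOPS_PER_GPU):
    """Crude estimate: peak throughput scaled by each GPU's utilization (0.0 to 1.0)."""
    return sum(u * peak for u in utilizations)


def hot_gpus(temps_c, threshold=HOT_THRESHOLD_C):
    """Return the indices of GPUs at or above the alert threshold."""
    return [i for i, t in enumerate(temps_c) if t >= threshold]


# Made-up sample readings for a 12-GPU system.
utilizations = [1.0] * 6 + [0.5] * 6
temps_c = [70, 72, 88, 69, 71, 90, 65, 66, 64, 67, 63, 62]

print(f"Estimated FP32 throughput: {estimated_tflops(utilizations):.1f} TFLOPS")
print(f"GPUs over threshold: {hot_gpus(temps_c)}")
```

In practice the readings would come from the GPU driver's monitoring interface rather than hard-coded lists.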

Conclusion: A New Era of Cost-Effective AI Innovation

Through innovative engineering and strategic hardware choices, BlueOptima has not only overcome the challenges posed by surging compute demands but has done so in a way that makes advanced AI development more accessible and affordable. This compact 11U solution with dual 128-core CPUs, massive memory, and a cutting-edge multi-GPU configuration sets a new standard in delivering PetaFLOP-level FP32 precision compute power.

Our approach redefines how organizations can build high-performance compute systems while dramatically reducing costs. BlueOptima invites you to explore how this breakthrough is paving the way for more efficient, scalable, and cost-effective AI development environments.
