As the saying often attributed to management consultant Peter Drucker goes, “If you can’t measure it, you can’t improve it.”

Measuring DevOps metrics is essential for identifying how your team can develop and deliver applications more efficiently and effectively. After all, without metrics, it’s impossible to know where to focus your attention or where you can make the most significant impact.

As the longest-running academic research programme of its kind, representing over six years of research and 31,000 data points, the DevOps Research and Assessment (DORA) programme helps companies achieve the DevOps goals of speed and stability by identifying the four key metrics that characterise elite teams.

Join us as we explore DORA metrics, including why they matter and what they tell us about an organisation’s DevOps performance. We’ll also look at some of the challenges of using them and how companies can drill down deeper to create effective change.

What Are DORA Metrics?

At the most fundamental level, DORA metrics help companies understand the actions required to develop and deliver technology solutions quickly and reliably.

The DORA framework looks at four key metrics divided across the two core areas of DevOps: Deployment Frequency and Mean Lead Time for Changes measure speed, while Change Failure Rate and Mean Time to Recovery measure stability.

Depending on how they score in each of these areas, companies are then classified as elite, high, medium or low performers.

Four Key DORA Metrics

1) Deployment Frequency

Deployment frequency measures how often an organisation deploys code to production or releases it to end users. Elite teams deploy on demand, while low performers deploy only between once a month and once every six months.

Within the DevOps world, you want to strive for “continuous” development, which in itself implies a high deployment frequency. Being able to deploy on demand lets you receive consistent feedback and deliver value to end users more quickly.

Deployment frequency can differ by organisation and across teams depending on what qualifies as a successful deployment.
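To make that definitional point concrete, here is a minimal sketch that computes deployment frequency from a deployment log. The log format, the dates and the `deployment_frequency` helper are all hypothetical. The `include_failed` flag stands in for the choice of what "counts" as a deployment, and flipping it changes the number for the same underlying activity.

```python
from datetime import date, datetime

# Hypothetical production deployment log: (timestamp, succeeded).
DEPLOYMENTS: list[tuple[datetime, bool]] = [
    (datetime(2024, 3, 1, 9, 30), True),
    (datetime(2024, 3, 1, 15, 10), True),
    (datetime(2024, 3, 4, 11, 0), False),
    (datetime(2024, 3, 5, 16, 45), True),
    (datetime(2024, 3, 8, 10, 5), True),  # falls outside the window below
]


def deployment_frequency(
    log: list[tuple[datetime, bool]],
    start: date,
    end: date,
    include_failed: bool = False,
) -> float:
    """Average deployments per day in the inclusive window [start, end].

    By default only successful deployments are counted; include_failed=True
    counts every attempt, an assumed alternative definition.
    """
    days = (end - start).days + 1  # inclusive of both start and end dates
    count = sum(
        1
        for ts, ok in log
        if (ok or include_failed) and start <= ts.date() <= end
    )
    return count / days


successful = deployment_frequency(DEPLOYMENTS, date(2024, 3, 1), date(2024, 3, 7))
all_attempts = deployment_frequency(
    DEPLOYMENTS, date(2024, 3, 1), date(2024, 3, 7), include_failed=True
)
print(f"{successful:.2f} vs {all_attempts:.2f} deployments per day")
# prints: 0.43 vs 0.57 deployments per day
```

Counting per day is only one choice of unit. Teams reporting against the DORA bands often reason in deployments per week or per month instead.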

2) Mean Lead Time for Changes

The lead time for changes is essentially how long it takes a team to go from code committed to code successfully running in production. Elite teams can complete this process in less than one day, while for low performers this process can take anywhere between one and six months.

Because change lead time also takes cycle time into account, this metric helps you understand whether your cycle time is efficient enough to handle a high volume of requests without your team becoming snowed under or delivering a poor user experience. However, a shorter lead time for changes does not mean a team is more productive, as outlined in a paper analysing over 600,000 developers.

Companies often experience longer lead times because of cultural and process factors that can slow a team down. Examples include separate test teams, projects running with different test phases, shared test environments and complicated routes to live.

The definition of lead time for changes can also vary widely, which often creates confusion within the industry.
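The sketch below illustrates the commit-to-production definition described above. The change data and the `lead_times_hours` helper are hypothetical. Other definitions, such as measuring from ticket creation or from the first commit on a branch, would need different timestamps. It reports both mean and median because a single slow change can pull the mean up sharply.

```python
from datetime import datetime, timedelta
from statistics import mean, median

# Hypothetical (commit_time, running_in_production_time) pairs, one per change.
CHANGES: list[tuple[datetime, datetime]] = [
    (datetime(2024, 3, 1, 9, 0), datetime(2024, 3, 1, 17, 0)),   # 8 hours
    (datetime(2024, 3, 2, 10, 0), datetime(2024, 3, 3, 10, 0)),  # 24 hours
    (datetime(2024, 3, 4, 8, 0), datetime(2024, 3, 4, 12, 0)),   # 4 hours
]


def lead_times_hours(pairs: list[tuple[datetime, datetime]]) -> list[float]:
    """Lead time of each change, in hours, from commit to production."""
    # Dividing one timedelta by another yields a float.
    return [(deployed - committed) / timedelta(hours=1) for committed, deployed in pairs]


hours = lead_times_hours(CHANGES)
print(f"mean {mean(hours):.1f} h, median {median(hours):.1f} h")
# prints: mean 12.0 h, median 8.0 h
```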

3) Change Failure Rate

Change failure rate is the percentage of changes to production that result in degraded service, such as a service impairment or outage, and need to be fixed. In essence, it highlights how effective your deployment process is.

Interestingly, elite, high and medium performers all have a change failure rate of 0-15%, while low performers have a change failure rate of 40-65%.

The gold standard is a deployment process that’s completely automated, consistent and reliable. Without an automated, well-established process, teams are likely to experience high change failure rates, as they’ll need to make lots of small manual changes.

Because the definition of failure can vary widely, from a script failing to run to a deployment failing to start, this metric can be difficult to quantify.
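Once a team has agreed what counts as a failure, the arithmetic itself is simple. The minimal sketch below assumes each hypothetical `Deployment` record already carries a `caused_failure` flag, and that is exactly where the definitional work sits. It compares the result against the 0-15% band quoted above.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    deploy_id: str
    caused_failure: bool  # True if it degraded service and needed a fix


def change_failure_rate(deployments: list[Deployment]) -> float:
    """Percentage of deployments that caused a failure."""
    if not deployments:
        raise ValueError("no deployments in the measurement period")
    failures = sum(1 for d in deployments if d.caused_failure)
    return 100.0 * failures / len(deployments)


# Eight deployments, one of which (d3) caused a failure.
history = [Deployment(f"d{i}", caused_failure=(i == 3)) for i in range(8)]
rate = change_failure_rate(history)
print(f"change failure rate: {rate:.1f}%")  # prints: change failure rate: 12.5%
print("within the 0-15% band" if rate <= 15 else "outside the 0-15% band")
```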

4) Time to Recovery

Time to recovery, also called mean time to recovery (MTTR) or time to restore service, measures how long it takes a DevOps team to restore service when an incident or defect impacts customers, for example an unplanned outage or service impairment.

This metric is essential for making sure you can recover quickly from incidents. Elite performers can restore service in less than one hour, while low performers take between one week and one month. Elite teams achieve a low MTTR by deploying in small batches to reduce risk and by using good monitoring tools to preempt failures.

Time to restore can be difficult to measure, as the way it is tracked can differ between systems.
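This minimal sketch shows one way to compute MTTR from incident records. It assumes each hypothetical incident has a single "impact started" and "service restored" timestamp. In practice, the start point (first alert, first customer report or incident declaration) is one of the choices that makes this metric differ between systems. The result is checked against the one-hour elite threshold mentioned above.

```python
from datetime import datetime, timedelta

# Hypothetical incidents: (customer impact started, service restored).
INCIDENTS: list[tuple[datetime, datetime]] = [
    (datetime(2024, 3, 2, 14, 0), datetime(2024, 3, 2, 14, 40)),   # 40 min
    (datetime(2024, 3, 9, 3, 15), datetime(2024, 3, 9, 4, 35)),    # 80 min
    (datetime(2024, 3, 20, 11, 0), datetime(2024, 3, 20, 11, 30)), # 30 min
]


def mean_time_to_recovery(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Average time from impact start to restoration."""
    if not incidents:
        raise ValueError("no incidents in the measurement period")
    # sum() needs a timedelta start value to add timedeltas together.
    total = sum((restored - started for started, restored in incidents), timedelta())
    return total / len(incidents)


mttr = mean_time_to_recovery(INCIDENTS)
print(mttr)  # prints: 0:50:00
print("elite (< 1 hour)" if mttr < timedelta(hours=1) else "not elite")
```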

Why Use DORA Metrics?

As a proven and widely accepted methodology, DORA metrics are a useful starting point for understanding your company’s current delivery performance and how you compare to others in your industry. While DORA metrics are relevant for any company developing or delivering software, they’re most commonly used in the technology, financial services and retail/e-commerce industries.

According to the most recent State of DevOps report, elite performers have grown to represent 20% of survey respondents, while high performers represent 23%, medium performers 44% and low performers only 12%.

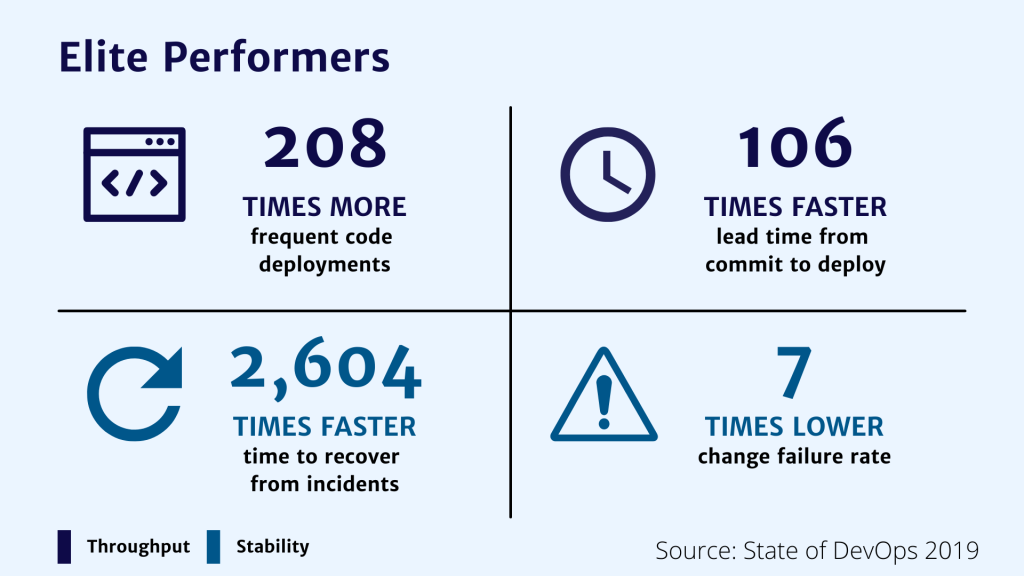

Becoming an elite performer can positively impact both the business and its end users, as the State of DevOps report shows.

DORA metrics can also help organisations:

- Measure software delivery throughput and stability to understand how teams can improve.

- Make data-based decisions rather than relying on gut instinct.

- Create trust within an organisation, which decreases friction and allows for quicker, higher quality delivery.

- Learn more about the finer details of your organisation’s DevOps and identify ways to improve.

Challenges of DORA Metrics

While DORA metrics are a good starting point, the approach is survey-based, which makes it challenging to pinpoint the specific changes you need to make or to tailor them to your organisation.

Metrics can also vary widely between organisations and departments, as different people use different definitions and measurement tools or protocols. DORA metrics are also often tied to specific applications rather than to team leads or structures.

As a result, most organisations use DORA to get a top-level overview but then turn to other tools, such as BlueOptima, to drill down deeper and identify specific ways to improve productivity.

BlueOptima helps companies take their DORA assessment a step further by conducting strategic analysis and gathering insights at a root level. We analyse code revisions across a core set of 36 metrics to determine exact productivity levels on a scale of 1 to 5.

Examining team leads and structures allows for a more specific analysis that uncovers the best, most cost-effective ways to create positive change. On an ongoing basis, this also allows you to determine whether your investment is having the intended impact.

Find out more about BlueOptima metrics, how we help teams measure productivity and our process here.
