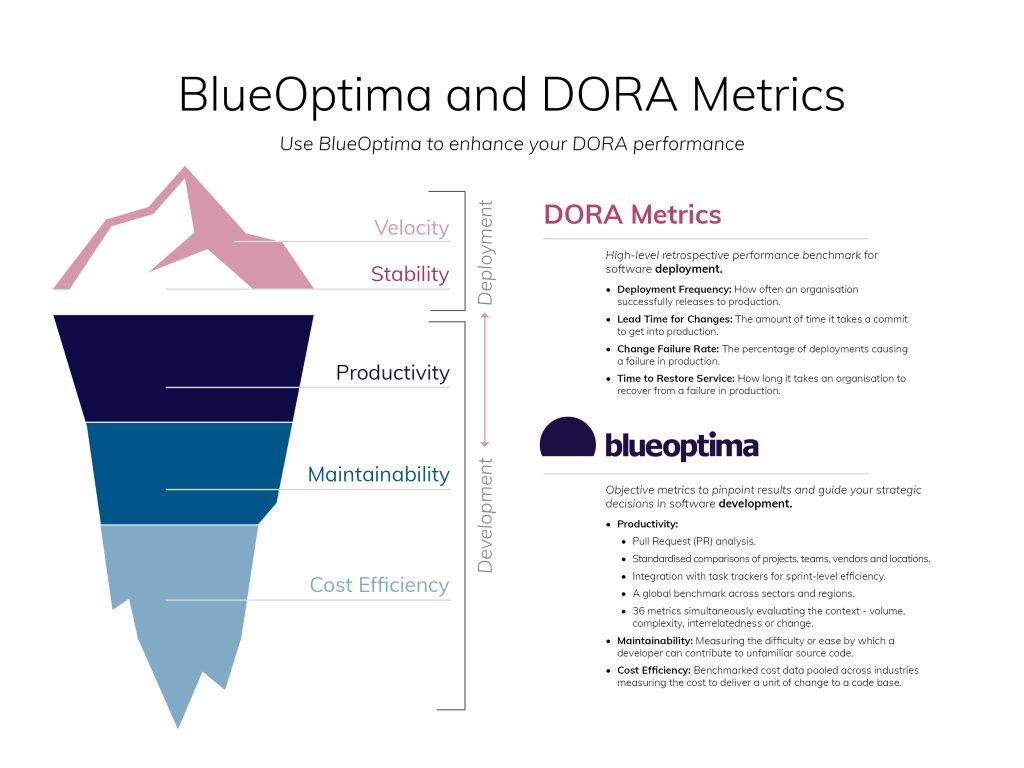

DORA is a widely used framework for measuring performance, but does it give the complete picture?

Since its launch in 2016, DORA (DevOps Research and Assessment) has become one of the leading tools for measuring DevOps teams’ performance and identifying areas for improvement. The four main metrics used by DORA are deployment frequency, lead time for changes, change failure rate, and mean time to recover. These metrics can provide valuable insight into the effectiveness, efficiency, and reliability of a team’s software development and delivery process.
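To make the four metrics concrete, here is a minimal Python sketch of how they could be derived from a list of deployment records. The `Deployment` record, its field names, and the sample data are hypothetical and exist only for illustration. Using the median for lead time and the mean for recovery time is one reasonable choice, not a definition mandated by DORA.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a failure in production?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_metrics(deployments, period_days):
    """Compute the four DORA metrics for deployments made within a period."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")

    lead_times_s = [(d.deployed_at - d.committed_at).total_seconds() for d in deployments]
    failures = [d for d in deployments if d.failed]
    recoveries_s = [
        (d.restored_at - d.deployed_at).total_seconds()
        for d in failures
        if d.restored_at is not None
    ]

    return {
        "deployment_frequency_per_day": len(deployments) / period_days,
        "median_lead_time_hours": median(lead_times_s) / 3600,
        "change_failure_rate": len(failures) / len(deployments),
        # None when no failed deployment has been restored in the period.
        "mean_time_to_recover_hours": (
            sum(recoveries_s) / len(recoveries_s) / 3600 if recoveries_s else None
        ),
    }


# Illustrative sample data: four deployments over an eight-day period.
sample = [
    Deployment(datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 15)),
    Deployment(datetime(2024, 1, 2, 9), datetime(2024, 1, 3, 9),
               failed=True, restored_at=datetime(2024, 1, 3, 11)),
    Deployment(datetime(2024, 1, 4, 10), datetime(2024, 1, 4, 14)),
    Deployment(datetime(2024, 1, 5, 8), datetime(2024, 1, 5, 18),
               failed=True, restored_at=datetime(2024, 1, 5, 22)),
]
metrics = dora_metrics(sample, period_days=8)
```

For this sample, the sketch yields 0.5 deployments per day, a median lead time of 8 hours, a change failure rate of 0.5, and a mean time to recover of 3 hours.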

In this article, we look at some of the pros and cons of using DORA and why organisations should always use the metrics as part of a wider analytics stack to ensure a balanced analysis.

The pros:

- DORA metrics can help teams identify areas of improvement in their processes and make informed decisions about how to optimise their workflow.

- They also provide objective data that can be used to compare different teams or departments within an organisation.

- This data can be used to benchmark performance and track progress over time.

The cons:

- They may not take into account all the factors that contribute to a successful software delivery process.

- These metrics may not be able to accurately reflect the complexity of certain tasks or projects.

Digging a bit deeper into the pros:

DORA metrics can help teams identify areas of improvement in their processes and make informed decisions about how to optimise their workflow.

When used properly, DORA metrics help DevOps teams identify bottlenecks or issues that might be slowing down their processes. This data can then inform decisions about where to add resources or make changes to improve efficiency. Additionally, using a combination of these metrics can help teams establish benchmarks and compare their performance over time. This helps identify areas for improvement and shows whether any changes have succeeded in addressing those issues.

DORA metrics can be used to compare different teams or departments within an organisation

DORA metrics can be used to compare different teams or departments within an organisation, which provides an objective and data-driven way to benchmark performance. By tracking key metrics such as deployment frequency, lead time for changes, change failure rate, and mean time to recover, teams can see how their performance stacks up against other departments or teams. This allows them to identify areas where they might need to focus more attention in order to improve their efficiency or effectiveness.

DORA metrics can be used to benchmark performance and track progress over time

Using DORA metrics also allows teams to track their progress over time. This is especially useful when deciding how best to optimise their workflow, as teams can use the data collected by these metrics to measure the impact of any changes they have implemented. Furthermore, being able to quickly and easily compare the performance of different teams can help identify areas where additional resources may be needed or further investigation is warranted.
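As a rough illustration of tracking one metric over time, the sketch below groups deployment dates by ISO calendar week so that deployment frequency can be compared week by week, for example before and after a process change. The function name and the sample dates are hypothetical, and weekly grouping is just one possible granularity.

```python
from collections import Counter
from datetime import date


def deployments_per_week(deploy_dates):
    """Count deployments per (ISO year, ISO week), sorted chronologically.

    Weeks with no deployments do not appear in the result.
    """
    counts = Counter()
    for d in deploy_dates:
        iso = d.isocalendar()          # (ISO year, ISO week, ISO weekday)
        counts[(iso[0], iso[1])] += 1
    return dict(sorted(counts.items()))


# Illustrative sample: deployment dates across three weeks of January 2024.
sample_dates = [
    date(2024, 1, 2), date(2024, 1, 4),
    date(2024, 1, 9), date(2024, 1, 10), date(2024, 1, 12),
    date(2024, 1, 16),
]
weekly = deployments_per_week(sample_dates)
```

Here `weekly` maps week 1 of 2024 to two deployments, week 2 to three, and week 3 to one. The same grouping could be applied to lead times or failure counts to follow the other metrics over time.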

Where DORA metrics can fall short

DORA metrics may not take into account all the factors that contribute to a successful software delivery process

While DORA metrics can be incredibly useful in terms of tracking and comparing team performance, it is important to note that they may not take into account all the factors that contribute to a successful software delivery process. For example, these metrics do not measure aspects such as customer satisfaction with the product or quality assurance. Additionally, these metrics may not capture the actual work being done behind the scenes – such as developing features and fixing bugs – which can also have an impact on delivery timelines and success.

Furthermore, DORA metrics don’t always provide a complete picture of how teams are performing. While they may offer data-driven insights into areas for improvement, this data does not always paint an accurate picture when it comes to more qualitative factors such as employee morale or overall team dynamics.

DORA metrics may not be able to accurately reflect the complexity of certain tasks or projects

This is because these metrics measure key data points such as deployment frequency and lead time, which are more applicable to smaller, simpler tasks or projects. When looking at more complex operations involving multiple teams, processes and archetypes, these metrics may not provide an accurate reflection of the amount of time, energy and resources put into these tasks or projects.

Furthermore, DORA metrics do not take into account external factors that can affect project outcomes. For example, while deployment frequency and lead time are important for gauging project progress, they say nothing about market conditions or customer expectations. As such, relying solely on DORA metrics may result in a skewed interpretation of a project’s success or failure.

DORA metrics can be manipulated and are not a representative measure of productivity

While DORA metrics are popular for assessing software development productivity, our latest whitepaper scrutinises their limitations. The study, which analysed over 600,000 developers across 30 enterprises, finds no direct link between faster lead time to change (LTTC) and improved coding output or quality. It suggests that prioritising release speed can negatively affect software development teams’ performance. The paper advocates a more balanced approach, recommending additional metrics that focus on code quality and productivity to truly assess performance. For a detailed understanding, you can access the full paper here.

In conclusion…

DORA metrics can be a powerful tool for businesses when used to compare teams and departments within an organisation. The objective data they provide covers key performance indicators such as deployment frequency, lead time for changes, change failure rate, and mean time to recover. These metrics are invaluable for understanding the efficiency of a software development and delivery process. They can also be used to identify areas of improvement and track progress over time. However, they are not a good measure of productivity.

The danger of relying only on DORA metrics is that they fall victim to Goodhart’s Law: once reliability and velocity become targets for development teams, the metrics lose their value as meaningful measures, and the key goals of productivity and adding value are neglected.

BlueOptima’s Developer Analytics

With Developer Analytics, IT leaders gain deeper insights into their software development processes, allowing them to home in on blockers to productivity, teams introducing significant technical debt, or new best practices from exceptional teams.
